Toolkit

From Research to Real-World Impact. Discover the TUPLES project outcomes: 27 public scientific software releases (including Tuples Lab and Scikit-Decide), a self-assessment tool for planning and scheduling, and a dedicated competition platform. Explore the publications page to learn more about the impressive scientific work produced by the consortium.

Scientific Software

Explore the scientific software, benchmark tools, and open frameworks developed within the TUPLES project — from hybrid planning and safety verification to explainability and real-world deployment.

The TUPLES project has made its solving algorithms openly available across several repositories. The 27 software releases fall into three main categories:

Hybrid Planning and Scheduling

Frameworks integrating learning and optimization: GOOSE, Numeric ASNets, BubbleGraph, PyConA, and Tempo, plus methods for decision-focused learning and graph-based scheduling.

Safety and Robustness

Tools such as BugHive, Veritas, and OC-SCORE enable testing, verification, and protection against adversarial attacks, ensuring trustworthy real-world deployment.

Trust in Explanations

Solutions for explainable constraint solving, MUGS computation, and step-wise explanations, helping users understand and trust AI decisions.

Together, these resources provide the scientific and technical foundations developed during the project.

You can access them here.

TUPLES Lab is a library for simulating simplified versions of the project’s use cases and evaluating their KPIs through a high-level API.

The library supports both building and using a wide range of benchmark problems that share the same structure.

It is designed for two kinds of users:

- programmers, who can build a new benchmark (or a group of benchmarks) using the internal API;

- end users, who want to test their algorithms and models on the proposed benchmarks.

The lab enables investigations that would be impossible on many of the original use cases. For example, one may be able to evaluate the robustness and safety of a system against adjustable degrees of uncertainty.
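As a rough illustration of what evaluating robustness against an adjustable degree of uncertainty can look like, here is a minimal sketch in plain Python. It does not use the TUPLES Lab API: the task durations, the deadline, the uniform noise model, and the function names are all assumptions made up for this example.

```python
import random

# Hypothetical nominal task durations (hours) of a fixed sequential plan.
NOMINAL = [4.0, 2.5, 3.0, 1.5]
# Hypothetical deadline (hours) the plan must meet.
DEADLINE = 12.0


def simulate_makespan(durations, noise, rng):
    """Return the total duration of the plan under perturbed task durations.

    Each nominal duration is multiplied by a factor drawn uniformly
    from [1 - noise, 1 + noise]; `noise` is the adjustable uncertainty level.
    """
    return sum(d * rng.uniform(1.0 - noise, 1.0 + noise) for d in durations)


def on_time_rate(durations, deadline, noise, runs=1000, seed=0):
    """Estimate the fraction of simulated runs that finish by the deadline."""
    rng = random.Random(seed)  # fixed seed keeps the estimate reproducible
    hits = sum(
        simulate_makespan(durations, noise, rng) <= deadline
        for _ in range(runs)
    )
    return hits / runs


if __name__ == "__main__":
    # Sweep the uncertainty level and report the robustness KPI for each value.
    for noise in (0.0, 0.1, 0.3, 0.5):
        rate = on_time_rate(NOMINAL, DEADLINE, noise)
        print(f"noise={noise:.1f}  on-time rate={rate:.3f}")
```

Sweeping the noise level turns a single pass/fail check into a curve, which makes it easier to see where a plan stops being reliable.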

You can access the TUPLES Lab here.

Scikit-Decide is a library initially developed by Airbus for Planning, Scheduling and Reinforcement Learning. It provides a common framework for, and bridges between, these three areas of sequential decision-making.

Domains can be described by their distinctive features, which are exploited by the different solver classes provided in the library.

Scikit-Decide relies heavily on Discrete-Optimization, another library developed by Airbus, to solve combinatorial optimization problems. It can be found at the following location.

Some algorithms developed in TUPLES are being integrated into Scikit-Decide and Discrete-Optimization, such as MUS computations and the use of GNNs with RL to solve PDDL problems.

Scikit-Decide was also used as a domain descriptor for the TUPLES Beluga Challenge, as it provides a seamless bridge between PDDL and RL models.

Scikit-Decide can be accessed here.

Use Case Demonstrators

For each of its use cases, the TUPLES project developed demonstrators that showcase how its novel hybrid-AI techniques can operate in real-world settings. See also the detailed description of each use case here.

The workforce production, allocation, and scheduling problem focuses on helping planners optimize both the scheduling and the allocation of logistics activities, and on explaining the proposed solutions to them.

One particularly complex case of manufacturing allocation is the Airbus Beluga cargo operation. The combination of the aircraft’s size, the unique loading and unloading procedures, and the challenges of air traffic management make it an exceptional use case. This scenario was at the core of the workforce allocation competition promoted by TUPLES.

The Flight Diversion Assistant use case consists of generating and proposing to the pilot different diversion routes to alternate airports when the original nominal route can no longer be flown. Flight diversion assistants will help pilots make better strategic decisions and let them concentrate on piloting the aircraft.

The squad management use case is about evaluating the current roster of a professional football team and planning for the coming 6-12 months. In practice, this means making decisions regarding (1) contract extensions, (2) buying and selling players, and (3) allocating a certain number of minutes to a given player.

The waste collection use case is about managing, in the most efficient way, the routes of the trucks that collect waste bins in a given city and bring the collected waste to the treatment plants. As such, the solution should provide the human planner with:

• Minimum number of trucks to service the entire city;

• Area to be covered by each truck;

• Detailed driving instructions for each trip.
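To give a feel for the first item, the following minimal sketch estimates the number of trucks from load and capacity alone. It is not the method used in the TUPLES demonstrator: it swaps in a first-fit decreasing bin-packing heuristic and ignores routing, distances, and shift limits. The area loads, the truck capacity, and the function names are hypothetical.

```python
import math


def capacity_lower_bound(loads, capacity):
    """No solution can use fewer trucks than total load / capacity, rounded up."""
    return math.ceil(sum(loads) / capacity)


def first_fit_decreasing(loads, capacity):
    """Assign loads to trucks with the first-fit decreasing heuristic.

    Returns a list of trucks, each a list of the loads it carries.
    The result is a feasible assignment, not necessarily an optimal one.
    """
    trucks = []     # loads assigned to each truck
    remaining = []  # free capacity left on each truck
    for load in sorted(loads, reverse=True):
        if load > capacity:
            raise ValueError(f"load {load} exceeds truck capacity {capacity}")
        for i, free in enumerate(remaining):
            if load <= free:
                trucks[i].append(load)
                remaining[i] -= load
                break
        else:
            # No existing truck has room, so open a new one.
            trucks.append([load])
            remaining.append(capacity - load)
    return trucks


if __name__ == "__main__":
    # Hypothetical waste volumes (tonnes) per collection area and truck capacity.
    area_loads = [4, 8, 1, 4, 2, 1]
    truck_capacity = 10

    assignment = first_fit_decreasing(area_loads, truck_capacity)
    print("lower bound:", capacity_lower_bound(area_loads, truck_capacity))
    print("trucks used:", len(assignment))
    print("assignment:", assignment)
```

When the heuristic matches the capacity lower bound, as it does for these sample loads, the truck count is optimal with respect to capacity; a real planner would still need to check that each truck's route is drivable within a shift.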

The energy management use case is about managing the cogeneration (or trigeneration) plants that produce the heating (and, where applicable, the cooling) needed by the users served by a District Heating Network, while selling electricity to the network operator.

Self Assessment Tool

A web-based diagnostic survey that supports the evaluation of trustworthiness in AI systems for Planning & Scheduling. Developed from EU guidelines and refined through consortium feedback.

The TUPLES self-assessment tool translates abstract principles into clear, actionable recommendations on robustness, safety, transparency, and accountability.

It is aimed at developing verification and explanation methods capable of reasoning about the properties of the solutions produced by planning and scheduling systems, in particular when these are represented by neural networks.

The TUPLES self-assessment tool is an independent tool that increases awareness of best practices and guidelines on Human-centered AI.

Whether you are developing AI-driven planning tools, assessing third-party systems, or seeking alignment with EU best practices, explore how to enhance the trustworthiness of your AI solutions.

Try it here.

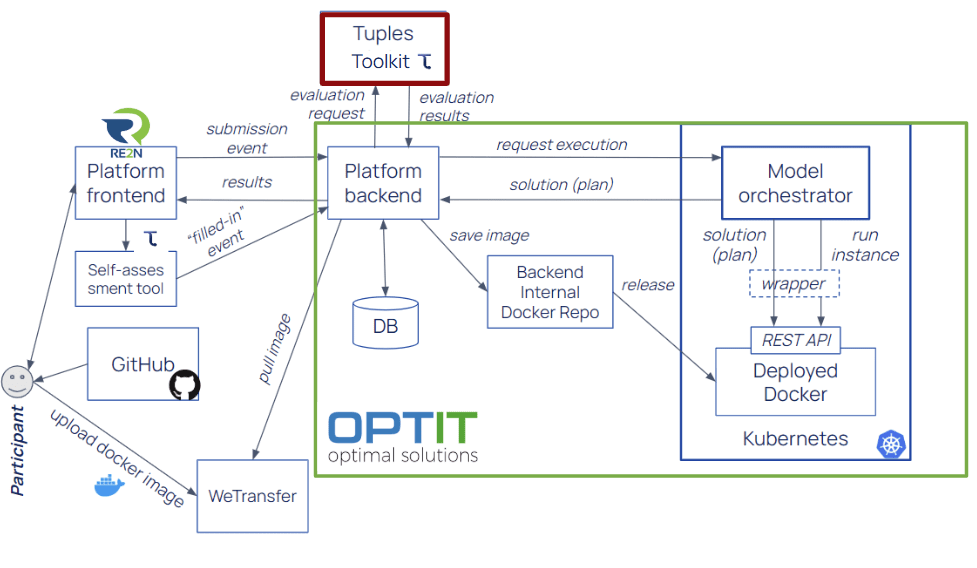

Competition Platform

A Next-Gen Environment for AI & Decision-Making Challenges. Designed to evaluate how algorithms behave and adapt in realistic scenarios.

The Competition Platform is a next-generation tool for AI and decision-making challenges, designed to evaluate how algorithms behave, strategize, and adapt in realistic scenarios (and not only to measure prediction accuracy).

Beyond Accuracy → Evaluates algorithms’ behavior, strategy, and logic

Enterprise-Ready → Secure environments with hybrid evaluation

Scalable & Flexible → Automated, repeatable pipelines, custom metrics

Customizable Workflows → Tailored to problem-specific needs and R&D

Already validated in the Airbus Beluga™ Competition, the platform has proven its scalability and effectiveness.