Human-Centric Planning at XAI 2025: IPEXCO and the Future of Explainable Decision Support

15/07/2025

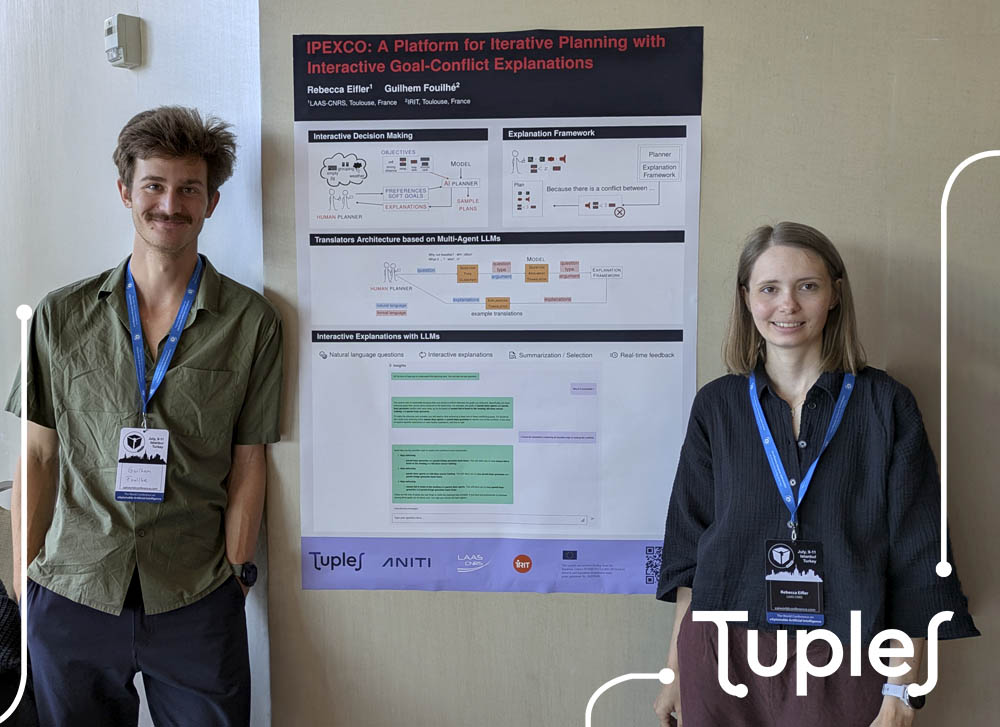

IPEXCO is designed to assist human planners in complex decision-making scenarios where multiple, often conflicting objectives must be reconciled. The system combines an automated planner with an explanation framework that translates the planner's internal reasoning about goal conflicts into natural-language feedback for the user.
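To make "conflict reasoning" concrete, here is a minimal sketch. It is not IPEXCO's actual implementation. It assumes that a conflict is a minimal set of soft goals that cannot all be satisfied together, which is a common formalization in explainable oversubscription planning. It also replaces the planner with a hypothetical toy feasibility check in which every goal draws on one shared budget. The goal names, costs, the `feasible` oracle and the sentence template in `explain` are all illustrative assumptions. In IPEXCO the wording is produced by LLM agents rather than by a fixed template.

```python
from itertools import combinations

# Hypothetical toy model: every soft goal draws on one shared resource budget.
# A real planner would run a solvability check instead of summing costs.
GOAL_COST = {"deliver_A": 4, "deliver_B": 3, "visit_C": 2, "return_base": 1}
BUDGET = 7


def feasible(goals):
    """Toy oracle: True if all goals in `goals` fit within the budget."""
    return sum(GOAL_COST[g] for g in goals) <= BUDGET


def minimal_conflicts(goals):
    """Return the minimal goal subsets that cannot be satisfied together.

    Subsets are checked in order of increasing size. An infeasible subset
    that contains no previously found conflict is minimal, because dropping
    goals never makes a feasible set infeasible in this model.
    """
    conflicts = []
    for size in range(1, len(goals) + 1):
        for subset in combinations(sorted(goals), size):
            s = frozenset(subset)
            if any(c <= s for c in conflicts):
                continue  # contains a smaller conflict, so it is not minimal
            if not feasible(s):
                conflicts.append(s)
    return conflicts


def explain(conflict):
    """Fixed-template stand-in for LLM-generated wording."""
    names = sorted(conflict)
    if len(names) == 1:
        return f"The goal {names[0]} cannot be achieved."
    return (f"The goals {', '.join(names[:-1])} and {names[-1]} "
            "cannot all be achieved together.")


for c in minimal_conflicts(GOAL_COST):
    print(explain(c))
# → The goals deliver_A, deliver_B and return_base cannot all be achieved together.
# → The goals deliver_A, deliver_B and visit_C cannot all be achieved together.
```

With a budget of 7, every pair of goals fits, so the two reported conflicts are triples. The brute-force search is exponential in the number of goals and only illustrates the idea.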

From Conflict to Clarity: The Role of LLMs

A key innovation of the platform is its multi-agent architecture built on large language models (LLMs). This lets IPEXCO interpret questions that users ask in natural language, such as “Why is this plan not feasible?” or “What would happen if…?”, and answer with clear, contextual explanations of the constraints and trade-offs in the planning problem.

Interactive and Iterative Planning

The tool supports iterative, human-in-the-loop decision making: it provides real-time feedback and lets users refine their goals and preferences interactively. Because it can expose and explain conflicts between soft goals (objectives the planner should try to satisfy but may have to give up), IPEXCO is a valuable resource in domains where explainability, trust, and adaptability are paramount.
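A "What would happen if…?" question during an iterative session can be illustrated with a small brute-force sketch. This is a hypothetical toy and not IPEXCO's method. The user picks a goal to enforce, and the sketch lists the maximal feasible goal sets that contain it. The goals outside each set are what the user would have to give up. The shared-budget model, the goal names and the wording produced by `what_if` are assumptions made for illustration.

```python
from itertools import combinations

# Hypothetical toy model: every soft goal draws on one shared resource budget.
# A real system would call the planner to decide feasibility instead.
GOAL_COST = {"deliver_A": 4, "deliver_B": 3, "visit_C": 2, "return_base": 1}
BUDGET = 7


def feasible(goals):
    """Toy oracle: True if all goals in `goals` fit within the budget."""
    return sum(GOAL_COST[g] for g in goals) <= BUDGET


def maximal_feasible_with(enforced, goals):
    """Return every maximal feasible goal set that contains all enforced goals."""
    enforced = frozenset(enforced)
    optional = sorted(set(goals) - enforced)
    found = []
    # Try larger sets first, so any feasible set that is not a proper subset
    # of an earlier result cannot be extended and is therefore maximal.
    for size in range(len(optional), -1, -1):
        for extra in combinations(optional, size):
            candidate = enforced | frozenset(extra)
            if feasible(candidate) and not any(candidate < f for f in found):
                found.append(candidate)
    return found


def what_if(goal, goals):
    """Answer 'What happens if I enforce `goal`?' with the goals to give up."""
    options = maximal_feasible_with({goal}, goals)
    if not options:
        return f"{goal} cannot be achieved, even on its own."
    drops = [sorted(set(goals) - s) for s in options]
    if drops == [[]]:
        return f"Enforcing {goal} does not force dropping any other goal."
    choices = " or ".join("{" + ", ".join(d) + "}" for d in drops)
    return f"Enforcing {goal} means dropping {choices}."


print(what_if("deliver_A", GOAL_COST))
# → Enforcing deliver_A means dropping {deliver_B} or {return_base, visit_C}.
```

In this example, enforcing `deliver_A` leaves two alternatives. The user can keep `return_base` and `visit_C` and drop `deliver_B`, or keep `deliver_B` and drop the other two. The user could then accept one alternative, adjust a preference and ask again. This repeats the human-in-the-loop cycle.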

We congratulate Rebecca and Guilhem on this important presentation and encourage everyone to explore the poster for a deeper look into the future of explainable decision support.